The Splunk lab is in progress. The initial configuration has needed so much troubleshooting to get working that it is likely to become its own project. It builds on the firewall and switching lab, so I’ve been engineering the whole lab from the ground up to make it work. It’s been fun, frustrating, and satisfying.

Progress so far:

-Splunk Enterprise on Ubuntu VM

—Dashboard Created

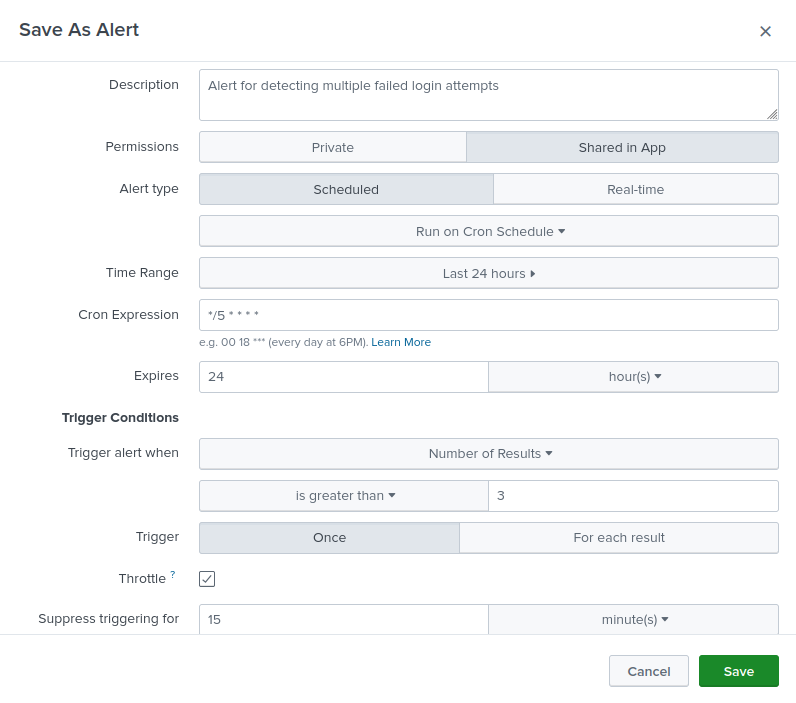

—Alerts Created

-Log forwarding agents on 2x Endpoints and counting

-Log forwarding from PA220 and Cisco 3750

-Security Policies on firewall

-VM migration to more capable machine

In progress:

-Shuffle SOAR in Docker container to integrate into Splunk

-SPL manipulation

-2x more Endpoints to add

-Firewall policy tuning

-Splunk report tuning

What’s next:

-Automated recon from Kali VM to create alerts

-Automated attacks from Kali to create alerts

-Tune firewall policy, Splunk alerts, and SOAR playbook to mitigate attacks on integrity, confidentiality, and availability of network and production.

Here are some lessons learned so far:

I used a combination of ChatGPT and Grok for instruction and troubleshooting on the technology I'm not proficient in while installing and configuring the lab. This is a good time to point out the limitations of using LLMs to solve problems, and how I approached using them.

-LLMs can only "solve" issues someone else has already solved.

When it came to technical troubleshooting, the LLMs helped a lot. When I could define the problems and errors, the LLM would normally have fixes that got me functional again. What this boils down to is that the LLM saved me the time of googling the key terms of a problem and parsing many forum posts. Within that scope, the LLM helped me do what I could already have done, but faster.

-LLMs are not good at thinking.

When it came to best practices, they helped keep me on track. I did simply ask them what kind of lab to build and with what technologies, and the answers were always kind of backwards. They are no substitute for the will. What I ended up building was always in my mind's eye. The LLMs helped me the most with thoughts that had already occurred to another human, not necessarily with new thoughts for my own niche, dynamic situations.

-Coding is hit and miss

I used both LLMs to create a script that would automatically expand the disk of the VM I was using. It was useful to be able to bounce the script between Grok and ChatGPT to get them to critique the code. I noticed Grok was trained on older information (from around 2022). I have a basic grasp of how the code works, so I was able to modify the scripts to fit my needs. This all leads me to my first solved problem:

Problem: Disk Space Exhaustion on VM

When I set up Ubuntu I allocated 40 GB of disk space. Splunk and Shuffle don't need a ton of space for what I wanted the lab to do, so that was sufficient for my purposes. One of the settings I glossed over was thick vs. thin provisioning. I believe that because I left it on thin, Ubuntu thought it had only 15 GB of disk space. The alert that I was almost out of disk space appeared as I was figuring out how to install and use Shuffle with Splunk.

After some investigation I learned that although 40 GB is the maximum disk size, that maximum applies only on VMware's side. I would need to run commands on the command line in Ubuntu to make use of the full 40 GB.
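For illustration, here is a minimal sketch of what that kind of expansion typically involves. It is not the script from this lab. It assumes a default Ubuntu LVM layout with an ext4 root filesystem, and the device and volume names (`/dev/sda`, partition 3, `/dev/ubuntu-vg/ubuntu-lv`) are assumptions that would need checking with `lsblk` and `lvs` first. The sketch only reports what the guest currently sees and prints a plan; it does not run anything.

```python
import shutil


def disk_summary(path="/"):
    """Return (total, used, free) in GiB for the filesystem holding `path`."""
    usage = shutil.disk_usage(path)
    gib = 1024 ** 3
    return tuple(round(v / gib, 1) for v in (usage.total, usage.used, usage.free))


def expansion_plan(disk="/dev/sda", part=3, lv="/dev/ubuntu-vg/ubuntu-lv"):
    """Build (but do not run) the usual grow steps for an LVM-backed ext4 root.

    All three default names are assumptions, not values from this lab.
    """
    return [
        ["growpart", disk, str(part)],        # grow the partition to fill the virtual disk
        ["pvresize", f"{disk}{part}"],        # let LVM see the larger physical volume
        ["lvextend", "-l", "+100%FREE", lv],  # give the logical volume all free extents
        ["resize2fs", lv],                    # grow the ext4 filesystem to match
    ]


total, used, free = disk_summary("/")
print(f"root filesystem: {total} GiB total, {used} GiB used, {free} GiB free")
for step in expansion_plan():
    print("would run:", " ".join(step))
```

Printing the plan before executing it, and taking a snapshot first, keeps a mistake in one of the assumed names from being destructive.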

Exasperated as I was, I figured it was a good opportunity to practice some automation. I described my problem and intent to the LLMs and had them critique each other's code. I will give it to ChatGPT: despite not retaining my whole configuration in its memory, it was able to make a script that accounted for a lot of variables. With a snapshot of the VM in hand, there was no risk in just trying it to see what happened.

There was one setting that wasn't configured correctly. ChatGPT was able to identify which line of the script needed to be replaced and offered a substitute. After updating the script I ran it again, but it behaved differently when run on top of its own earlier changes (for example, one of the file paths was now /var/var/ when it should have just been /var/). I reverted to the snapshot, ran the script with the simple fix, and it expanded the disk just fine.
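The /var/var/ symptom is what a script that is not idempotent tends to produce: a step that prepends a prefix gets applied a second time to a path that already has it. A minimal sketch of a guard against that is below. The function name `with_prefix` and the example path are hypothetical, not taken from the actual script.

```python
import posixpath


def with_prefix(path, prefix="/var"):
    """Return `path` placed under `prefix`, without adding the prefix twice."""
    path = posixpath.normpath("/" + path.lstrip("/"))
    prefix = posixpath.normpath(prefix)
    # Already under the prefix: leave it alone so a second run changes nothing.
    if path == prefix or path.startswith(prefix + "/"):
        return path
    return posixpath.join(prefix, path.lstrip("/"))


first_run = with_prefix("lib/example")   # hypothetical relative path
second_run = with_prefix(first_run)      # simulates running the script again
print(first_run, second_run)
```

Comparing against `prefix + "/"` rather than the bare prefix avoids treating an unrelated path such as /variable as if it were already under /var.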

I have an interest in understanding how the code and script work, and over time I will flesh out that understanding. What was more important that evening was to get my lab up and running. Being able to draw on others' experiences through an LLM helped me to focus on the task at hand and practice the more immediately relevant skills of security automation and orchestration.

The disk expansion broke GRUB, so I reverted to the last snapshot and reinstalled GRUB. I verified the fix by restarting the VM and confirming that it booted successfully.

Problem: Exhaustion of Splunk Licensed Ingestion Limit

The pro of a SIEM is also its con: log ingestion from multiple sources. I want the logs for problem solving and incident response, but I don't want copious amounts of superfluous data. After setting up log collection for my L3 switch, firewall, and 2x endpoints, I let it run for a few days and noticed that most of the logs were coming from the firewall. If the trend continued, it would limit the number of endpoints I could add while staying within the log limit of my Splunk license.

The inter-zone default rule on the Palo Alto drops all connections that don't match a security policy. By default it doesn't have logging enabled. With an override, I had it forwarding logs to Splunk whenever it dropped a session. Observing the dropped traffic helps me fine-tune policies and gives me an awareness of what the endpoints are trying to do.

Most of the blocked traffic was Ubuntu trying to update. I didn't want it to update and break anything, so I had not created a policy that allowed the connections. Instead, I created a security policy that blocked access to the Ubuntu servers and did not enable log forwarding on it. This successfully reduced hits on the inter-zone default rule and tuned some of the static out of the firewall traffic logs being ingested. This single policy has opened up the potential to add up to two more endpoints for log ingestion, all within the limits of my license.

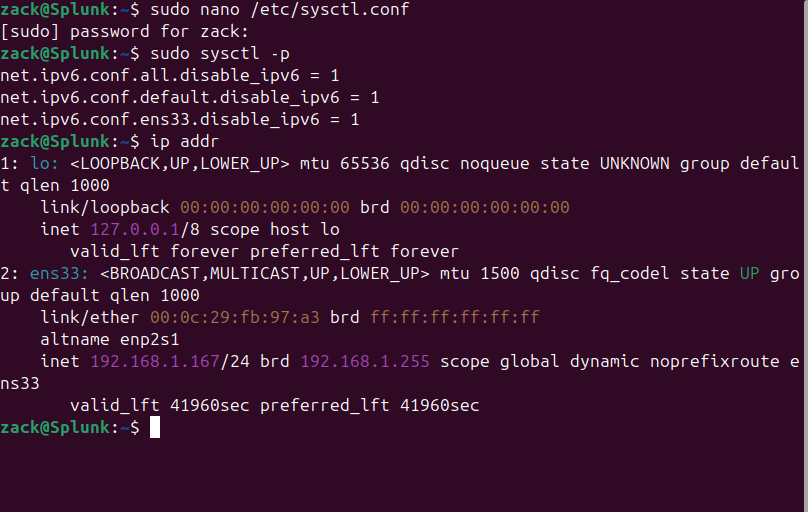
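The trade-off between firewall noise and endpoint headroom comes down to simple arithmetic. The sketch below makes it concrete, but every number in it is made up for illustration: the 500 MB/day limit, the per-source volumes, and the 40 MB/day per-endpoint estimate are placeholder assumptions, not measurements from this lab or the terms of my license.

```python
def endpoint_headroom(daily_mb_by_source, license_mb_per_day, mb_per_endpoint):
    """Return how many more endpoints fit under the daily ingestion limit."""
    used = sum(daily_mb_by_source.values())
    remaining = license_mb_per_day - used
    if remaining <= 0 or mb_per_endpoint <= 0:
        return 0
    return int(remaining // mb_per_endpoint)


LICENSE_MB = 500      # placeholder daily limit (assumption)
PER_ENDPOINT_MB = 40  # placeholder average per endpoint (assumption)

# Hypothetical daily volumes before and after silencing the update traffic.
before = {"firewall": 300, "switch": 20, "endpoints": 80}
after = {"firewall": 220, "switch": 20, "endpoints": 80}

print("room before tuning:", endpoint_headroom(before, LICENSE_MB, PER_ENDPOINT_MB))
print("room after tuning: ", endpoint_headroom(after, LICENSE_MB, PER_ENDPOINT_MB))
```

With these placeholder figures, trimming 80 MB/day of firewall logs raises the headroom from two additional endpoints to four. The real inputs would come from Splunk's own license usage report.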

Problem: IPv6

IPv6 has caused a myriad of problems.

One of them is simply not being able to see, at a glance, which subnet of servers the different endpoints are trying to reach through the firewall. With IPv4, I could see Ubuntu trying to connect to the same range of IP addresses, so it was easier to triage on the fly. Nothing about an IPv6 address sticks in my memory: I can take in the first four digits and the last four, whereas with IPv4 I can just about recall the whole address. It just slows things down.
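One way to take the eyeballing out of it is to group destination addresses by network prefix, so IPv6 destinations collapse into a few readable ranges the same way IPv4 ones do in my head. This is a minimal sketch using Python's standard `ipaddress` module. The /24 and /64 prefix lengths are assumptions chosen for readability, and the addresses are reserved documentation ranges, not traffic from my lab.

```python
import ipaddress
from collections import Counter


def count_by_prefix(addresses, v4_prefix=24, v6_prefix=64):
    """Count destination addresses per network prefix (IPv4 and IPv6)."""
    counts = Counter()
    for text in addresses:
        ip = ipaddress.ip_address(text)
        prefix = v4_prefix if ip.version == 4 else v6_prefix
        # strict=False masks off the host bits instead of raising an error.
        network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
        counts[str(network)] += 1
    return counts


# Documentation-range sample destinations (hypothetical).
sample = [
    "2001:db8:1:2::10",
    "2001:db8:1:2::beef",
    "2001:db8:9::1",
    "192.0.2.15",
    "192.0.2.77",
]

for network, hits in count_by_prefix(sample).items():
    print(network, hits)
```

The same grouping could be run over destination addresses exported from the firewall traffic logs.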

Additionally, I don't know if it's my firewall, but my policies to block IPv6 weren't always successful. Initially the IPv6 connections were causing a lot of static in my firewall traffic logs, so I created a policy to block them. It surprised me to see one occasionally still make it through to the inter-zone default rule.

The biggest problem came when Ubuntu updated and switched to preferring IPv6 without my knowledge.

Initially I had the Ubuntu update servers blocked, but then I wasn't able to run apt update, so I had to open the policy up to allow that traffic. When I relaunched the VM the next day, none of my logs were coming through and I couldn't access the internet from Ubuntu. I did some troubleshooting and determined it wasn't the firewall or the switch. Other endpoints were connecting just fine; it was only my Ubuntu VM. I was able to use an LLM to figure out how to disable IPv6 on Ubuntu through its network manager.

That got it working initially. I disabled updates through the GUI to prevent an update from pushing IPv6 again (or so I thought). A few days later I started to see IPv6 traffic coming from Ubuntu again, and as near as I can tell it had updated again. IPv6 was still switched off, but somehow Ubuntu had retained all of its old IPv6 information, and something in it was still trying to use that old configuration, which broke IPv4 connectivity.

With the help of an LLM, I found some other configuration files and was able to clear the old IPv6 configuration permanently. I also took the time to disable it on all of the other endpoints, just to get ahead of any potential problems in the future. It's been days since I've had another IPv6 connection come through my network.
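Since IPv6 came back once after I thought it was off, a quick check of the kernel's view is a useful sanity test after any update. The sketch below reads the `disable_ipv6` flag that Linux exposes under /proc/sys/net/ipv6/conf/. It only reports state and changes nothing, and it is not the fix I applied; the function name `ipv6_disabled` is just for illustration.

```python
from pathlib import Path


def ipv6_disabled(interface="all", root="/proc/sys/net/ipv6/conf"):
    """Return True/False from the kernel's disable_ipv6 flag, or None if absent.

    None means the flag file does not exist, for example on a non-Linux
    system or when the IPv6 stack is not loaded at all.
    """
    flag = Path(root) / interface / "disable_ipv6"
    try:
        return flag.read_text().strip() == "1"
    except FileNotFoundError:
        return None


for name in ("all", "default"):
    print(name, ipv6_disabled(name))
```

Checking both `all` and `default` matters because interfaces created later inherit from `default`, so a setting applied to only one of them can let IPv6 reappear.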